AI: Use it. Own it. Prove it.

January 7, 2026

TL;DR

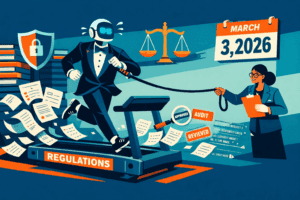

Mortgage lending is heavily regulated, which can make adopting AI feel like running a marathon in a tuxedo. But AI is attainable if lenders treat it as a governed system with testing, audit trails, and clear ownership, not a shiny toy. Freddie Mac’s latest guidance makes this official: if you use AI or machine learning in loans you sell to Freddie Mac, or in servicing them, you need real governance, real risk management, and real accountability.

This is non-negotiable, and the cost of meeting it needs to be factored into whether any AI tool will really deliver the ROI it claims.

Put In Context

Mortgage is one of the most rule-heavy industries in the U.S. That is not a hot take, it is Tuesday.

A mortgage lender has to follow federal and state laws, investor rules, agency guidelines, privacy requirements, record retention rules, and quality control standards. Then a regulator can show up and ask:

- Why did you approve this loan?

- Why did you deny that one?

- Show me your process

- Show me your proof

Now drop AI into that world.

AI is great at pattern finding, summarizing, drafting, sorting, and flagging weird stuff. It is also great at making confident mistakes, drifting over time, and being hard to explain when it matters most. The tension comes from the fact that mortgage demands evidence, while AI is inherently probabilistic.

And here is the twist. Freddie Mac is one of the first stakeholders to publish guidelines, and it will surely not be the last. It is not saying do not use AI. It is saying: fine, use it, but govern it like you mean it. Its rules take effect March 3, 2026.

Why It Matters

If you are a lender, compliance is not a department. It is gravity. Everything you do has to survive:

- audits

- repurchase risk

- fair lending reviews

- data security reviews

- vendor oversight

- and the dreaded “please explain this decision from 14 months ago” email

AI can help lower cost per loan and speed up cycle times, but only if it does not create a new category of risk called “we cannot prove what happened.”

Freddie Mac’s direction matters because it sets the tone for what acceptable AI looks like in mainstream mortgage. The biggest message is this:

AI is no longer just tech. It is risk management.

Freddie’s guidance describes a governance approach where lenders must have policies and practices to map, measure, and manage AI risks, understand and document legal and regulatory requirements, and bake trustworthy AI characteristics into how they operate.

The Real Problem: Mortgage Needs Receipts

When lenders adopt AI, they run into a simple question:

Can we explain and defend what the AI did?

If the answer is kinda, that is a no.

For AI in mortgage, you need:

- traceability, what data was provided and available to the AI

- consistency, does it behave the same way today as last month

- controls, who can change it

- audit evidence, prove it

A lot of generic AI tools were not built for that. They were built for speed, not scrutiny.

And scrutiny is kind of mortgage’s whole personality.
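The consistency item in the list above is one of the easier ones to turn into a routine check. Here is a minimal sketch, assuming you keep a small "golden set" of inputs with the outputs that were recorded when a model version was approved. The names `consistency_report`, `toy_classifier`, and `GOLDEN_SET` are hypothetical, and the toy classifier stands in for whatever model call you actually use.

```python
from typing import Callable, Dict, List


def consistency_report(
    model: Callable[[str], str],
    golden_set: Dict[str, str],
) -> List[str]:
    """Return the inputs whose current output differs from the recorded one."""
    return [
        text for text, expected in golden_set.items() if model(text) != expected
    ]


# Hypothetical stand-in for a document classifier; a real model call goes here.
def toy_classifier(text: str) -> str:
    return "paystub" if "gross pay" in text.lower() else "other"


# Illustrative data: outputs recorded when this model version was approved.
GOLDEN_SET = {
    "Gross Pay: 4,200.00": "paystub",
    "Account summary for checking": "other",
}

# An empty list means the model still answers the golden set the same way.
changed = consistency_report(toy_classifier, GOLDEN_SET)
```

Run on a schedule and after every model or prompt change, a non-empty result is a signal to pause and investigate before the change reaches production files.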

What Freddie Mac Is Basically Requiring, In Plain English

Here are the types of requirements and what they mean in practice.

1) A real AI risk management program

Freddie expects lenders and servicers to have organization wide policies and procedures to map, measure, and manage AI risk, and to have those controls be transparent and actually implemented.

Translation: We do not want Bob in Ops connecting ChatGPT to production.

2) Know the laws that apply to AI, and document that you know

Freddie’s framework calls out that legal and regulatory requirements involving AI must be understood, managed, and documented.

Translation: If AI touches underwriting, pricing, disclosures, borrower communications, or servicing decisions, you need to show how you are handling fair lending, consumer protection, privacy, and more.

3) Trustworthy AI is not optional

Freddie expects the characteristics of trustworthy AI to be integrated into your policies and practices.

Translation: Your AI should be reliable, fair, secure, monitored, and governed, like any other high impact system.

4) Scale the controls to your risk tolerance

They expect processes to determine how much risk management you need based on your organization’s risk tolerance.

Translation: Using AI to draft an email is not the same risk as using AI to recommend an underwriting decision. Treat them differently.

5) Test for AI specific threats

Freddie’s guidance includes assessing for threats like data poisoning and adversarial inputs.

Translation: Bad actors can try to trick models, corrupt training data, or manipulate outcomes. Your controls have to assume this happens.

6) Monitoring and audits

Freddie calls for ongoing monitoring and regular internal and external audits, plus monitoring for performance issues, security breaches, and bias.

Translation: “Set it and forget it” is dead. AI needs a checkup schedule.

7) Align to security standards

Freddie references audits tied to standards like NIST 800-53 and ISO 27001.

Translation: Your AI environment must meet serious security expectations.

8) Code of conduct and segregation of duties

Freddie calls for clear policies and codes of conduct and segregation of duties so the people who benefit from AI are not the only ones approving risk.

Translation: Do not let one team build it, bless it, and grade their own homework. This should be consistent with any other software your team uses.

9) Indemnification: you own the downside

Guide references for Section 1302.8 include an indemnification concept, where the Seller or Servicer agrees to indemnify Freddie Mac for liabilities arising from AI and machine learning use.

Translation: If your AI causes harm, it is not Freddie’s problem. It is yours.

10) Training for AI enabled threats

Updated security training language highlights AI powered threats like deepfakes and targeted phishing and threats to AI systems like prompt injection.

Translation: People cannot safely run AI systems they do not understand. Train your teams.

So Is AI Attainable in Mortgage?

Yes. But only if you stop thinking of AI as an app and start thinking of it as a controlled production system.

A good mental model is this:

Mortgage AI should behave like a regulated employee

If a human underwriter does work, you expect:

- training

- a playbook

- supervision

- quality control

- documented decisions

- escalation paths

AI needs the same stuff, just in software form.

The Trap: AI Everywhere Versus AI Where It Is Defensible

A lot of lenders get stuck because they jump straight to the highest risk uses, like underwriting decisions, credit, pricing, and eligibility. That is where the rules are tightest and the scrutiny is loudest.

Or they get enamored with a vendor that has really cool AI and don’t bother to ask the hard questions about how it works and how it is maintained.

A smarter path is to start with AI that improves speed and quality without making final decisions, like:

- document classification and validation, flag exceptions but do not override the file

- summarizing findings for humans, not replacing their judgment

- drafting borrower communications with templates and approvals

- workflow prioritization, what should ops touch next

Automate the repetitive work, route exceptions, and keep humans in control.
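One way to picture "route exceptions, keep humans in control" is a confidence threshold in front of a review queue. This is a sketch, not a recommendation: the `Classification` type, the queue names, and the 0.90 cutoff are all assumptions made for illustration, and the right threshold depends on your own testing and risk tolerance.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.90  # assumed cutoff; set it from your own validation data


@dataclass(frozen=True)
class Classification:
    doc_id: str
    label: str
    confidence: float


def route(result: Classification, threshold: float = REVIEW_THRESHOLD) -> str:
    """Pick a queue for a classified document; this never edits the loan file."""
    if result.confidence >= threshold:
        return "auto_index"    # repetitive, low-stakes filing step
    return "human_review"      # exception: a person looks at it and decides


confident = route(Classification("doc-1", "w2", 0.97))
uncertain = route(Classification("doc-2", "w2", 0.55))
```

The point of the design is that the model only chooses a queue. Anything it is unsure about lands in front of a person.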

What Compliant AI Looks Like in Real Life

If you want AI in a regulated lender, build around these guardrails:

Keep the AI on a leash

- Humans approve anything that impacts borrower outcomes, at least at first

- AI makes recommendations, humans make decisions

Log everything like you are already in an audit

- what data came in

- what did the model output

- what did the user do with it

- what version was running
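The four items above map naturally onto a single log record per AI interaction. The sketch below is one hedged way to shape it using only the Python standard library. The field names and the `make_record` and `to_json_line` helpers are hypothetical, and it assumes the raw input is retained elsewhere (for example in your document store), with the log holding a SHA-256 fingerprint of it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    loan_id: str
    model_version: str   # what version was running
    input_sha256: str    # fingerprint of the data that came in
    model_output: str    # what the model output
    user_action: str     # what the user did with it
    user_id: str
    recorded_at: str     # UTC timestamp, ISO 8601


def make_record(loan_id: str, model_version: str, model_input: str,
                model_output: str, user_action: str, user_id: str) -> AuditRecord:
    return AuditRecord(
        loan_id=loan_id,
        model_version=model_version,
        input_sha256=hashlib.sha256(model_input.encode("utf-8")).hexdigest(),
        model_output=model_output,
        user_action=user_action,
        user_id=user_id,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def to_json_line(record: AuditRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Illustrative values only.
record = make_record("LN-001", "classifier-1.4.2", "Gross Pay: 4,200.00",
                     "paystub", "accepted", "u123")
line = to_json_line(record)
```

Writing one line per interaction to append-only storage is what makes the "decision from 14 months ago" email answerable.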

Segment by risk

Create tiers:

- low risk: drafting, searching internal knowledge, summarizing

- medium risk: exception detection, workflow routing

- high risk: underwriting, pricing, eligibility decisions

Different tiers mean different testing, access controls, monitoring, and approvals.
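The tiers can live in configuration so that every use case has a declared tier and a matching set of controls. The sketch below uses the tier examples from the list above. The control values (review style, monitoring cadence) are illustrative assumptions, not anything Freddie Mac prescribes, and `controls_for` is a hypothetical helper.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Use cases taken from the tier list; keys are hypothetical identifiers.
USE_CASE_TIERS = {
    "drafting": RiskTier.LOW,
    "internal_search": RiskTier.LOW,
    "summarizing": RiskTier.LOW,
    "exception_detection": RiskTier.MEDIUM,
    "workflow_routing": RiskTier.MEDIUM,
    "underwriting": RiskTier.HIGH,
    "pricing": RiskTier.HIGH,
    "eligibility": RiskTier.HIGH,
}

# Illustrative controls only; set real values from your own risk assessment.
CONTROLS = {
    RiskTier.LOW: {"human_review": "sampled", "monitoring": "quarterly"},
    RiskTier.MEDIUM: {"human_review": "every_exception", "monitoring": "monthly"},
    RiskTier.HIGH: {"human_review": "every_output", "monitoring": "weekly"},
}


def controls_for(use_case: str) -> dict:
    """Look up controls; unregistered use cases get the strictest tier."""
    tier = USE_CASE_TIERS.get(use_case, RiskTier.HIGH)
    return CONTROLS[tier]


pricing_controls = controls_for("pricing")
```

Defaulting unknown use cases to the high tier is a deliberate choice here: a new AI use has to be classified before it gets lighter controls.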

Treat vendors like part of your control environment

If a vendor says “trust us,” that is adorable.

You still need:

- security review

- model governance expectations

- audit rights or audit evidence

- clear data handling terms

- incident response commitments

Freddie’s direction reinforces that lenders must have strong policies and oversight, not just a vendor contract.

The Big Answer

AI is attainable in mortgage, but attainable means governed

If your AI strategy is:

- documented

- monitored

- auditable

- secure

- fair lending aware

- and owned by leadership

Then yes, you can use AI and still sleep at night.

Think About It

If Freddie Mac expects you to map, measure, and manage AI risk, here is the executive question that cuts through the noise:

Where do we need speed, and where do we need proof?

Then build AI around both.

Speed without proof creates repurchase risk.

Proof without speed creates cost per loan pain.

The winning lenders will treat AI like operational infrastructure: fast and defensible.

Want to learn more about how to ensure you’re using AI responsibly? Drop us a note!